The Two Minds of a Machine: A Deep Dive into the Difference Between Weak and Strong AI

The Narrative Hook: The Ghost in the Chess Machine

In 1997, the world watched as a human player, arguably the greatest chess mind of his era, engaged in a silent war across a sixty-four-square battlefield. On one side sat Garry Kasparov, the reigning world chess champion, a man whose genius was a blend of intuition, creativity, and ferocious intellect. On the other sat Deep Blue, an IBM supercomputer. The air in the room was thick with tension, not just from the match, but from the weight of history. When Kasparov resigned the final game, the moment was monumental. For the first time, a machine had defeated a reigning world champion in a full match played under standard tournament time controls.

This historic event served as a pivot point, raising a question that continues to echo through the halls of technology and philosophy today: Was Deep Blue truly "thinking," or was it just an incredibly powerful calculator, capable of evaluating hundreds of millions of chess positions per second? Did it understand the game, or was it merely executing a hyper-specialized program with brutal efficiency? This is the fundamental question that separates the two major categories of artificial intelligence.

To understand the future we are building—a world increasingly shaped by algorithms and intelligent systems—we must first grasp the profound difference between the AI we have today, the specialist in a box, and the AI we dream of, the artificial mind.

The Core Concept: The Specialist vs. The Polymath

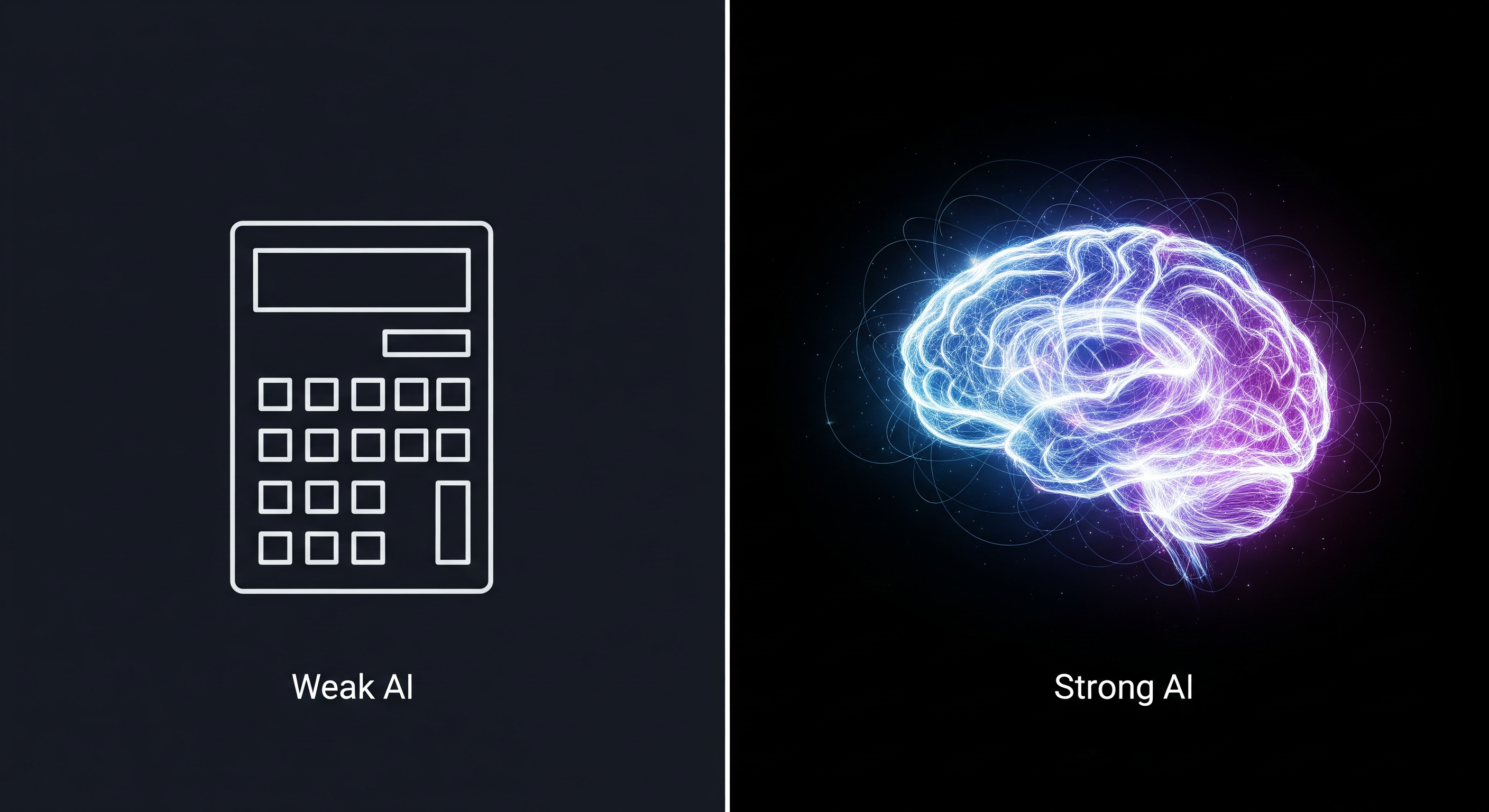

Before we can discuss the future potential of artificial intelligence, it is crucial to understand its most fundamental classification. The terms "Weak AI" and "Strong AI" are not measures of computing power or speed; they refer to the scope and nature of a machine's intelligence. This distinction is the key to separating the reality of AI today from the theoretical possibilities of tomorrow.

So, what is the difference between Weak AI and Strong AI?

- Weak AI (Narrow AI): An artificial intelligence system designed and trained to excel at a single, specific task. It operates within a predefined range and does not possess general cognitive abilities.

- Strong AI (Artificial General Intelligence - AGI): A theoretical system with the general cognitive abilities of a human being. It would be capable of understanding, learning, and applying its knowledge across a wide and unpredictable range of tasks.

A simple analogy can solidify this distinction. Think of Weak AI as a highly specialized tool, like a calculator. It is faster and more accurate at arithmetic than any human alive, but it cannot write a poem, understand a joke, or learn to paint. Its genius is profound but exceptionally narrow. Strong AI, in contrast, would be like a versatile human craftsperson. This craftsperson can learn new skills, adapt to different materials, solve unforeseen problems creatively, and apply knowledge gained from woodworking to a new challenge in metalworking.

Now, let's unpack what these concepts mean in the real world we inhabit and the future we imagine.

The Deep Dive: Exploring the AI Landscape

1. The World of Weak AI: The Specialized Genius All Around Us

Despite its somewhat dismissive name, Weak AI is the most successful and revolutionary form of artificial intelligence to date. Its power lies not in breadth but in a laser-like focus that has allowed it to permeate modern life in ways we rarely notice, automating and optimizing countless aspects of our daily routines.

The Explanation: What It Is

Weak AI, also called Narrow AI or specialized AI, is defined by its limitations. Its core characteristics include:

- Task-Specific: It is developed to handle a single, specific job, whether that's facial recognition, language translation, or playing a game of chess.

- Lack of Generalization: It cannot apply its knowledge outside of its designated domain. An AI that masters chess cannot use that knowledge to drive a car.

- No Consciousness: Critically, Weak AI lacks any self-awareness, genuine understanding, or consciousness. It operates purely on programmed algorithms and learned patterns from the data it's fed.

- Human Assistance: These systems often require human intervention for maintenance, updates, and handling unexpected situations that fall outside their programming.

The two primary types of AI that fall under this umbrella are reactive machines, which respond only to the current input and retain no memory of past interactions, and limited memory machines, which can draw on stored past data to learn and improve their future decisions.
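To make the two categories concrete, here is a minimal Python sketch built around a hypothetical thermostat controller. The class names, the 20 °C threshold, and the three-reading window are illustrative assumptions, not a standard design. The reactive agent maps each reading straight to an action, while the limited-memory agent keeps a short history and decides from its average.

```python
from collections import deque


class ReactiveAgent:
    """Reacts only to the current input; stores nothing between calls."""

    def __init__(self, threshold: float = 20.0) -> None:
        self.threshold = threshold

    def act(self, temperature: float) -> str:
        return "heat" if temperature < self.threshold else "idle"


class LimitedMemoryAgent:
    """Remembers a short window of past readings and decides from their average."""

    def __init__(self, threshold: float = 20.0, window: int = 3) -> None:
        self.threshold = threshold
        self.history = deque(maxlen=window)  # oldest readings drop off automatically

    def act(self, temperature: float) -> str:
        self.history.append(temperature)
        average = sum(self.history) / len(self.history)
        return "heat" if average < self.threshold else "idle"


readings = [25.0, 25.0, 18.0, 18.0, 18.0]
reactive = ReactiveAgent()
memory = LimitedMemoryAgent()
print([reactive.act(t) for t in readings])  # reacts to the first cold reading
print([memory.act(t) for t in readings])    # waits until the recent trend is cold
```

The reactive agent switches to "heat" at the first 18 °C reading; the limited-memory agent only does so once its whole window has turned cold. Real limited-memory systems are vastly more complex, but the structural difference is the same: state carried over from past inputs.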

The "Real World" Analogy: How to Think About It

Imagine a digital assistant like Siri or Alexa as a brilliant librarian in a very specific, very organized library. This librarian can instantly retrieve any fact within their designated section—weather forecasts, sports scores, appointment reminders. They are impossibly fast and accurate. However, if you ask this librarian for a personal opinion, a creative idea, or knowledge from a different section of the library they weren't trained on, they are completely lost. They are executing a command based on recognizing keywords and patterns, not truly understanding the meaning or context of your request.
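The librarian's "keywords and patterns" behavior can be caricatured in a few lines of Python. This is a minimal sketch under loud assumptions: the intent names and keyword sets are hypothetical, and production assistants like Siri or Alexa rely on trained language-understanding models rather than a hand-written table. What the sketch does show is the failure mode described above: a request outside the known sections simply falls through to "unknown".

```python
import re

# Hypothetical intents and trigger words, invented for illustration only.
INTENTS = {
    "weather": {"weather", "rain", "forecast", "temperature"},
    "sports": {"score", "game", "match"},
    "reminder": {"remind", "reminder", "appointment"},
}


def match_intent(utterance: str) -> str:
    """Return the intent whose keywords overlap the utterance most, or 'unknown'."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    best, best_hits = "unknown", 0
    for intent, keywords in INTENTS.items():
        hits = len(words & keywords)  # count shared words; meaning is never considered
        if hits > best_hits:
            best, best_hits = intent, hits
    return best


print(match_intent("Will it rain tomorrow?"))                       # weather
print(match_intent("What do you think about the meaning of life?"))  # unknown
```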

The "Zoom In": How It Works

A perfect example is the recommendation system used by services like Netflix or Amazon. When Netflix suggests a new show, it isn't making a creative or empathetic choice. The system is executing a highly refined, multi-step process. It operates on learned patterns by analyzing your viewing history, comparing it to vast datasets from millions of other users with similar tastes, and then executing its predefined algorithm to suggest content you are statistically likely to enjoy. It is an incredibly efficient pattern-matching process, not a conscious one.
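The pattern-matching idea can be sketched with textbook user-based collaborative filtering. This is an illustration only: Netflix's and Amazon's production systems are proprietary and far more sophisticated, and the users, titles, and star ratings below are invented. The sketch measures how similar two users' tastes are with cosine similarity, then scores unseen titles by a similarity-weighted sum of other users' ratings.

```python
from math import sqrt

# Hypothetical 1-5 star ratings; users and numbers are invented for illustration.
RATINGS = {
    "alice": {"Dark": 5, "Stranger Things": 4, "The Crown": 1},
    "bob": {"Dark": 5, "Stranger Things": 5, "Black Mirror": 4},
    "carol": {"The Crown": 5, "Bridgerton": 4, "Dark": 1},
}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two users, computed over titles both have rated."""
    common = a.keys() & b.keys()
    if not common:
        return 0.0
    dot = sum(a[t] * b[t] for t in common)
    norm_a = sqrt(sum(a[t] ** 2 for t in common))
    norm_b = sqrt(sum(b[t] ** 2 for t in common))
    return dot / (norm_a * norm_b)


def recommend(user: str, ratings: dict[str, dict[str, float]], k: int = 1) -> list[str]:
    """Rank titles the user hasn't seen by the similarity-weighted sum of others' ratings."""
    mine = ratings[user]
    scores: dict[str, float] = {}
    for other, theirs in ratings.items():
        if other == user:
            continue
        sim = cosine(mine, theirs)  # how closely this user's tastes match ours
        for title, stars in theirs.items():
            if title not in mine:
                scores[title] = scores.get(title, 0.0) + sim * stars
    return sorted(scores, key=scores.get, reverse=True)[:k]


print(recommend("alice", RATINGS, k=2))  # ['Black Mirror', 'Bridgerton']
```

Bob's ratings track Alice's far more closely than Carol's do, so Bob's unseen favorite ranks first. Nothing in the computation knows what a thriller or a costume drama is; it only compares numbers.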

"People have no idea how much of their lives is actually governed by weak AI." — James Rolfsen, Data Analytics Expert

While Weak AI automates the present, Strong AI represents the dream of the future.

2. The Dream of Strong AI: The Quest for an Artificial Mind

Strong AI, or Artificial General Intelligence (AGI), represents the ultimate, though still theoretical, goal of AI research. This is the concept that fuels both our greatest hopes for humanity and our deepest fears about technology. It is the quest to create a machine with an intelligence indistinguishable from—or even superior to—a human mind.

The Explanation: What It Is

Strong AI is defined by its possession of general cognitive abilities and a human-like mind. Key characteristics would include:

- General Intelligence: The ability to understand, learn, and apply knowledge across entirely different contexts, just as humans do.

- Consciousness and Self-Awareness: It would possess self-awareness and consciousness, allowing it to understand and reflect on its own existence.

- Adaptability: It could adapt to new and unfamiliar situations, learning from its experiences in a way that mimics human cognitive processes.

- Autonomy: It could operate independently, make its own decisions, and solve problems without direct human intervention.

- Ethical Reasoning: A true AGI would potentially possess the ability to make ethical and moral decisions.

Crucially, like a human child, this AI would have to learn and develop its abilities over time through input and experiences. We see this concept explored in science fiction with characters like HAL 9000 from 2001: A Space Odyssey or Data from Star Trek: The Next Generation—beings with genuine consciousness and general problem-solving skills.

The "Real World" Analogy: How to Think About It

Imagine a brilliant, multi-talented detective. This detective doesn't just have a database of facts about past crimes (like a Weak AI). They can perceive a new crime scene in its entirety, conceptualize different theories about what happened, recognize when they are facing a novel problem they've never seen before, develop a creative solution to catch the culprit, and learn from the entire experience to become better at solving future, completely unrelated cases. This highlights the adaptability, creativity, and general intelligence that would define Strong AI.

The "Zoom In": How It Would Work

To structure research into this monumental task, institutions such as THWS (the Technical University of Applied Sciences Würzburg-Schweinfurt) have proposed design concepts that break general intelligence down into the core functional areas it would need to implement:

- Perception: Taking in and processing environmental data.

- Conceptualization: Forming ideas and understanding abstract concepts.

- Problem Recognition: Identifying new and existing challenges.

- Solution Development: Devising novel strategies and answers.

- Learning: Independently acquiring and expanding knowledge.

- Act of Motion/Speech: Interacting with the world.

Achieving this would be a game-changer. An AGI could scan all the world's knowledge to solve pressing problems in medicine, climate change, or physics. However, it also raises significant ethical challenges regarding safety, control, and the potential for bias on a massive scale.

Even if we could build such a machine, how would we know if it was truly thinking?

3. The Consciousness Chasm: Can a Machine Truly Understand?

The biggest hurdle between Weak and Strong AI isn't just a matter of technology; it's a matter of philosophy. The critical debate is whether a machine can ever achieve genuine understanding and consciousness, or if it will only ever be a master of simulation.

The Explanation: The Core Debate

For decades, the classic method for evaluating machine intelligence has been the Turing Test. Proposed by Alan Turing in his 1950 paper "Computing Machinery and Intelligence," where he called it the "imitation game," its setup is simple: a human interrogator holds a text-based conversation with both a human and a machine. If the interrogator cannot reliably tell which is which, the machine is said to have passed the test.

However, philosopher John Searle presented a powerful critique of this idea with his Chinese Room Argument (CRA). He argued that even if a machine could pass the Turing Test, it would not prove genuine intelligence or understanding.

The "Real World" Analogy: The Chinese Room

Searle's thought experiment asks you to imagine a person locked in a room who does not speak a word of Chinese. Inside the room is a book filled with a complex set of rules. Notes written in Chinese are passed into the room through a slot. The person uses the rulebook to look up the incoming Chinese symbols, find the symbols the rules prescribe in reply, copy them onto a new note, and pass it back out. To an outside observer who speaks Chinese, the room appears to "understand" Chinese perfectly; it gives intelligent, appropriate responses. But the person inside the room has zero actual understanding of the language. They are simply manipulating symbols according to a set of rules.
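In programming terms, Searle's rulebook is little more than a lookup table. The Python sketch below is a deliberately tiny, hypothetical version: the three entries and the fallback reply are invented for illustration (English glosses are in the comments), and a rulebook good enough to fool a fluent speaker would be unimaginably larger. The function returns fluent-looking replies while operating purely on the shapes of the strings.

```python
# A toy "rulebook": each incoming string of symbols maps to an outgoing string.
# The entries are illustrative; Searle's imagined rulebook would be vastly larger.
RULEBOOK = {
    "你好": "你好！",  # "Hello" -> "Hello!"
    "你好吗？": "我很好，谢谢。",  # "How are you?" -> "I'm fine, thanks."
    "今天天气怎么样？": "今天天气很好。",  # "How's the weather today?" -> "The weather is nice today."
}
FALLBACK = "请再说一遍。"  # "Please say that again."


def chinese_room(note: str) -> str:
    """Match the note's exact shape and copy out the listed reply.

    Nothing here parses, translates, or interprets the characters: the lookup
    would behave identically if every symbol were swapped for an arbitrary glyph.
    """
    return RULEBOOK.get(note, FALLBACK)


for note in ["你好吗？", "今天天气怎么样？", "你叫什么名字？"]:
    print(note, "->", chinese_room(note))
```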

The "Zoom In": What It Means

In this light, IBM's Deep Blue was the ultimate Chinese Room. It followed the rules of chess and searched positions at superhuman speed, manipulating the syntax of the game with relentless precision. But it had zero semantic understanding of what it means to "win," or of the beauty of a well-played match. It was a tireless symbol processor, not a thinking opponent.
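The kind of "syntax without semantics" search described here can be illustrated with minimax, the textbook game-tree search that chess programs build on. Deep Blue itself ran a heavily engineered alpha-beta search on custom chess hardware, so this sketch is only an analogy under stated assumptions: it plays a hypothetical take-away game (a pile of stones, each turn remove 1 to 3, whoever takes the last stone wins) instead of chess, and it uses the compact "negamax" form of minimax.

```python
from functools import lru_cache

MOVES = (1, 2, 3)  # a player may remove 1, 2, or 3 stones per turn


@lru_cache(maxsize=None)
def negamax(stones: int) -> int:
    """Score for the player about to move: +1 = can force a win, -1 = will lose."""
    if stones == 0:
        return -1  # the opponent just took the last stone, so the mover has lost
    # My best result is the move that leaves my opponent with the worst score.
    return max(-negamax(stones - m) for m in MOVES if m <= stones)


def best_move(stones: int) -> int:
    """Choose the move that leaves the opponent in the lowest-scoring position."""
    return max((m for m in MOVES if m <= stones), key=lambda m: -negamax(stones - m))


print([negamax(n) for n in range(1, 9)])  # [1, 1, 1, -1, 1, 1, 1, -1]
print(best_move(7))  # 3, leaving the opponent a losing pile of 4
```

The program "discovers" the winning rule for this game (always leave a multiple of four) without representing anything like a goal, an opponent, or the satisfaction of winning; it only compares +1 and -1.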

The Chinese Room Argument highlights this profound difference. The process inside the room—and by extension, the operation of a computer program—is purely syntactical. It is about manipulating symbols based on a formal set of rules. A human mind, however, has semantic content. As Searle himself put it, “Computation is defined purely formally or syntactically, whereas minds have actual mental or semantic contents, and we cannot get from syntactical to the semantic just by having the syntactical operations and nothing else…”

Searle's argument, then, isn't just an academic puzzle; it's a direct challenge to the very soul of the AGI project, forcing us to ask if we're chasing a mind or just a mirror. This philosophical chasm complicates our journey toward AGI and raises the stakes for the next theoretical frontier: superintelligence.

A Tale of Two AIs: A Step-by-Step Scenario

To truly grasp the functional difference, imagine a city facing a sudden, unprecedented environmental crisis: a new, unknown contaminant has appeared in the water supply, and the source is a mystery.

Step 1: The Weak AI's Response

A collection of today's most advanced Weak AI systems would be deployed to tackle the problem.

- A large language model like ChatGPT would be asked to summarize what is already known about water contaminants, treatment methods, and industrial chemicals, drawing on patterns learned from its training data, a vast body of text gathered largely from the public internet.

- A data analysis AI would sift through terabytes of existing sensor data from the city's water grid, searching for statistical anomalies or patterns that correlate with the contamination reports.

- A navigation AI like Google Maps would be used to optimize routes for emergency water trucks and direct inspection teams to high-priority areas based on the data patterns.
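The data-analysis step in this list can be sketched as a simple z-score test. This is a minimal Python illustration under assumptions: the readings are invented values from a single hypothetical sensor, the "normal" baseline is taken from a period known to be clean, and the threshold of three standard deviations is a common rule of thumb rather than anything a real utility is known to use. Note what it delivers: the position of a suspicious reading, with no idea what the contaminant is.

```python
from statistics import mean, stdev


def flag_anomalies(baseline: list[float], readings: list[float], threshold: float = 3.0) -> list[int]:
    """Return the indices of readings whose z-score against the baseline exceeds `threshold`."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation of the clean period
    if sigma == 0:
        raise ValueError("baseline has no variation; z-scores are undefined")
    return [i for i, x in enumerate(readings) if abs(x - mu) / sigma > threshold]


# Hypothetical readings from one water-quality sensor.
clean_period = [0.50, 0.52, 0.48, 0.51, 0.49, 0.50]
today = [0.51, 0.49, 0.95, 0.50]
print(flag_anomalies(clean_period, today))  # [2]
```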

The limitation is clear: they are all powerful tools operating within their predefined functions. They provide valuable data and perform specific tasks with superhuman efficiency. But they cannot conceptualize a solution to a problem that has never existed before or reason about the novel chemical properties of an unknown contaminant.

Step 2: The Strong AI's Response

A theoretical Strong AI (AGI) would approach the same problem holistically and dynamically.

- It would perceive real-time data from every sensor in the city—water, air, traffic, and more—simultaneously.

- It would conceptualize the problem, forming multiple hypotheses about the contaminant's nature and origin by cross-referencing knowledge from chemistry, biology, geology, and city planning.

- It would recognize this as a novel problem requiring a new solution, not one found in its existing data.

- It would develop solutions by running millions of simulations to engineer a unique filtration method tailored to the new substance, or by designing a search pattern for human teams that is based on a complex, evolving model of the situation.

- It would learn continuously from the unfolding event, updating its models in real-time.

- Finally, it would act by communicating its complex reasoning, step-by-step instructions, and confident predictions to human emergency response teams.

This is the difference between syntax and semantics in action. The Weak AIs would process symbols (contaminant data, traffic patterns), while the Strong AI would achieve semantic understanding of the crisis itself, allowing it to generate a truly novel solution.

Step 3: The Outcome

The contrast is stark. The collection of Weak AIs provides crucial support by executing specialized tasks faster than any human could. The Strong AI, however, would demonstrate genuine, generalized problem-solving, exhibiting adaptability, creativity, and a level of understanding on par with, or greater than, that of a team of human experts.

The AI Glossary: An "Explain Like I'm 5" Dictionary

Navigating the world of AI means learning a new vocabulary. Here are some of the most important terms broken down into simple, accessible definitions.

- Weak AI (Narrow AI): An AI system designed and trained for a specific task or a narrow range of tasks, operating on predefined algorithms without general intelligence or consciousness.

  - Think of it as... a master chef who can only cook one perfect dish. They're the best in the world at that one thing, but they can't make you a sandwich.

- Strong AI (Artificial General Intelligence - AGI): A theoretical form of AI that possesses general cognitive abilities, self-awareness, and consciousness, capable of understanding, learning, and applying knowledge across a wide range of tasks, much like a human being.

  - Think of it as... an apprentice who can learn any skill you teach them (cooking, carpentry, painting) and eventually combine those skills to invent something new on their own.

- Artificial Superintelligence (ASI): A hypothetical type of AI that would surpass human intelligence and ability in practically every way, possessing complete self-awareness and cognitive functions far beyond our own.

  - Think of it as... having access to a mind like Albert Einstein's, but one that also understands every other field of human knowledge perfectly and can think a million times faster.

- Turing Test: A test of a machine's ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. A human evaluator holds text conversations with both a human and a machine and tries to tell which is which.

  - Think of it as... a text-message guessing game. If you're texting with a computer and a person, and you can't tell which is which, the computer passes the test.

- Chinese Room Argument: A philosophical thought experiment asserting that programming a computer can result in the simulation of understanding, but cannot produce genuine understanding or consciousness, regardless of how intelligently the system behaves.

  - Think of it as... using a perfect translation phrasebook. You can use the book to respond flawlessly in a language you don't know, but you still don't actually understand a word you're "saying."

- Deep Learning: A sub-field of machine learning in which artificial neural networks, built from many layers of interconnected artificial neurons, learn complex patterns from large amounts of data. The approach is loosely inspired by how networks of neurons in the brain work.

  - Think of it as... showing a toddler thousands of pictures of cats. You don't give them rules for what a cat is; their brain just starts to recognize the patterns on its own. Deep learning does this with data.

Conclusion: The Mirror on the Wall

The distinction between Weak AI and Strong AI is the most important concept in understanding artificial intelligence today. Weak AI is the powerful, task-oriented, and profoundly useful reality that is already reshaping our world. Strong AI remains the ambitious, world-changing, and theoretical goal for tomorrow—a quest to build not just a tool, but a mind.

As we move forward, the key takeaways are clear:

- Scope is Key: The difference is not power, but generality. Weak AI is a specialist; Strong AI is a generalist.

- Consciousness is the Chasm: Today's AI simulates intelligence through sophisticated pattern matching. True AGI would require genuine understanding and self-awareness—a philosophical and technical goal we have not yet achieved.

- The Journey Teaches Us: The pursuit of Strong AI is not just about building better machines; it is also a way of understanding our own minds.

In the end, the intense effort to replicate human decision-making and interaction in AI is teaching us more than ever about ourselves. By striving to build an artificial mind, we are forced to confront the very nature of our own intelligence—the immense flexibility and contextual understanding that is, as James Rolfsen puts it, "one of the things that makes humans magical beings." The quest for artificial intelligence, it turns out, is the most complex mirror we have ever built. In our struggle to teach a machine what it means to think, we are, for the first time, truly learning to define ourselves.